Product Parameters

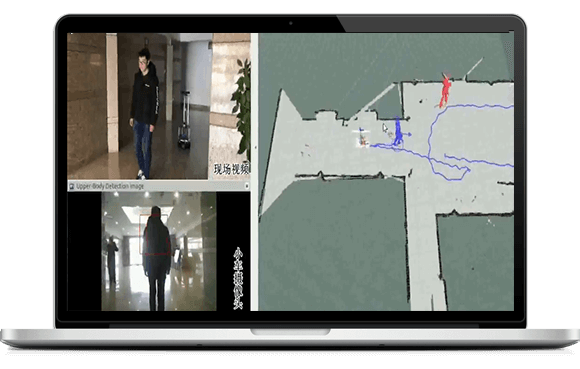

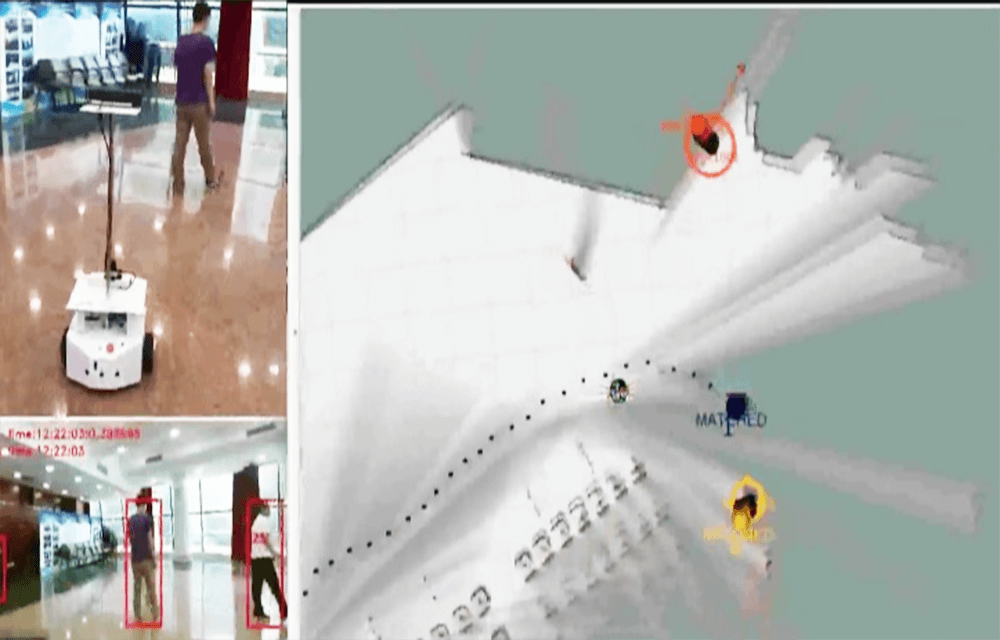

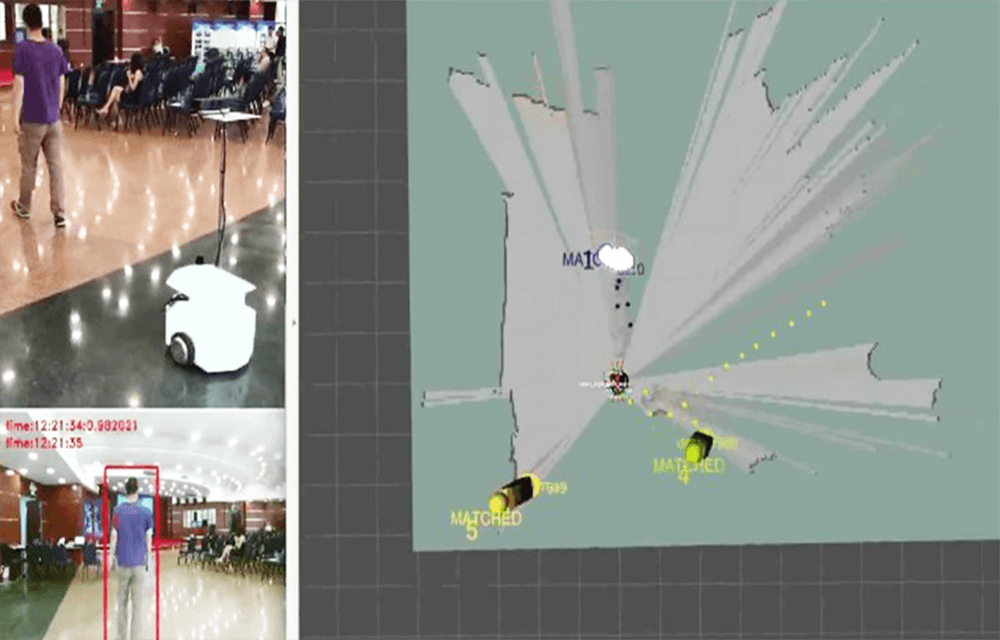

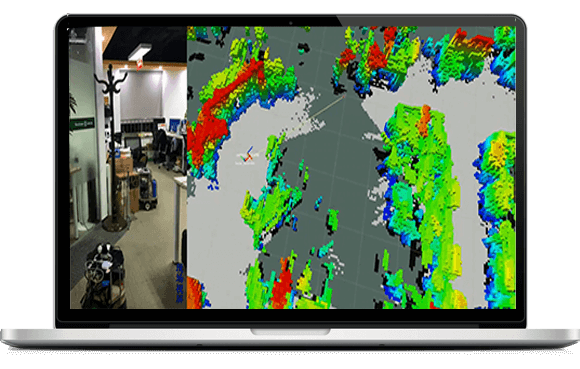

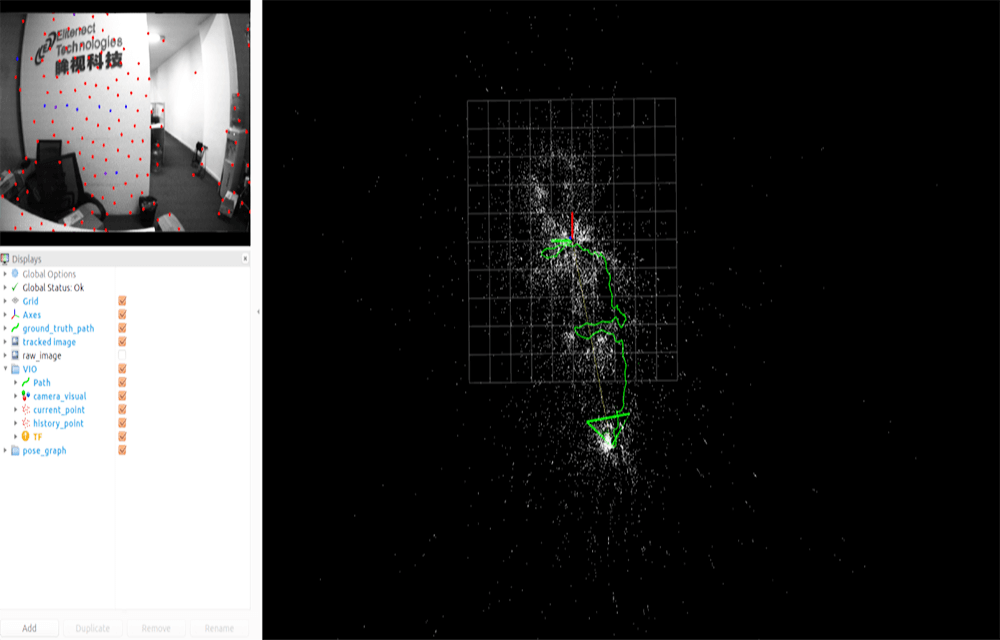

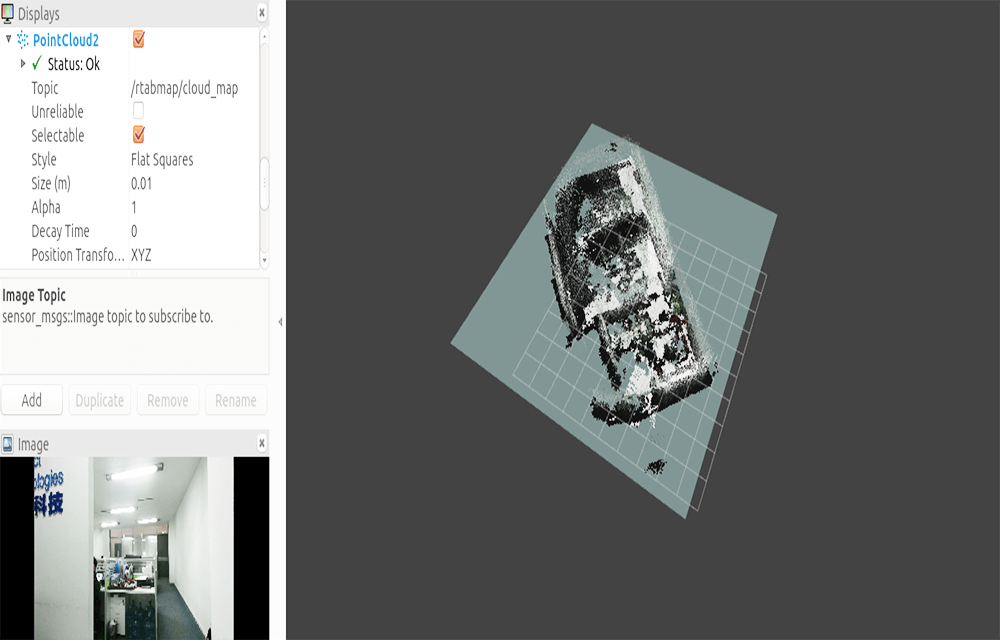

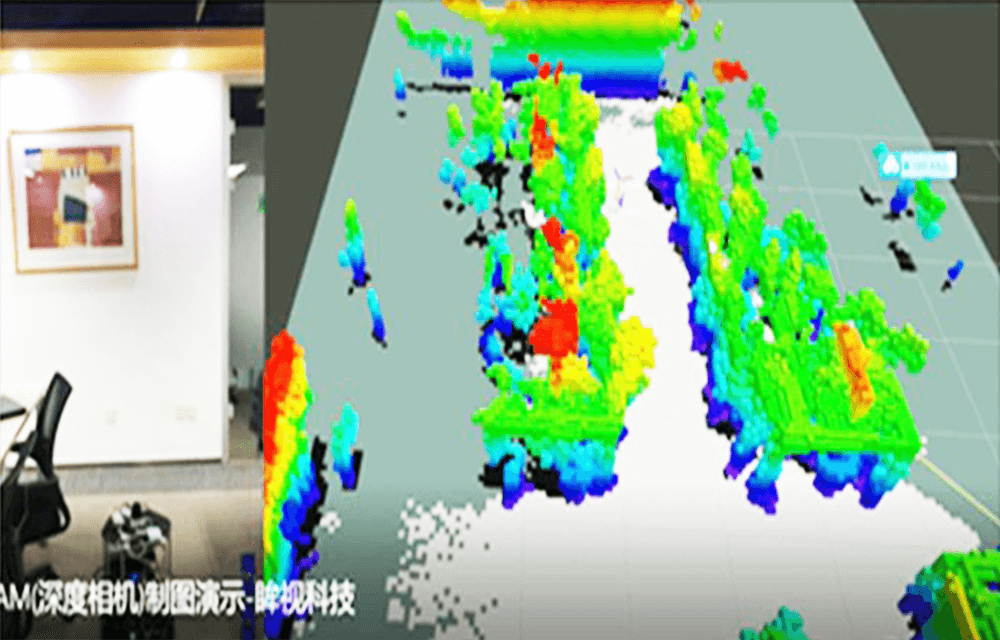

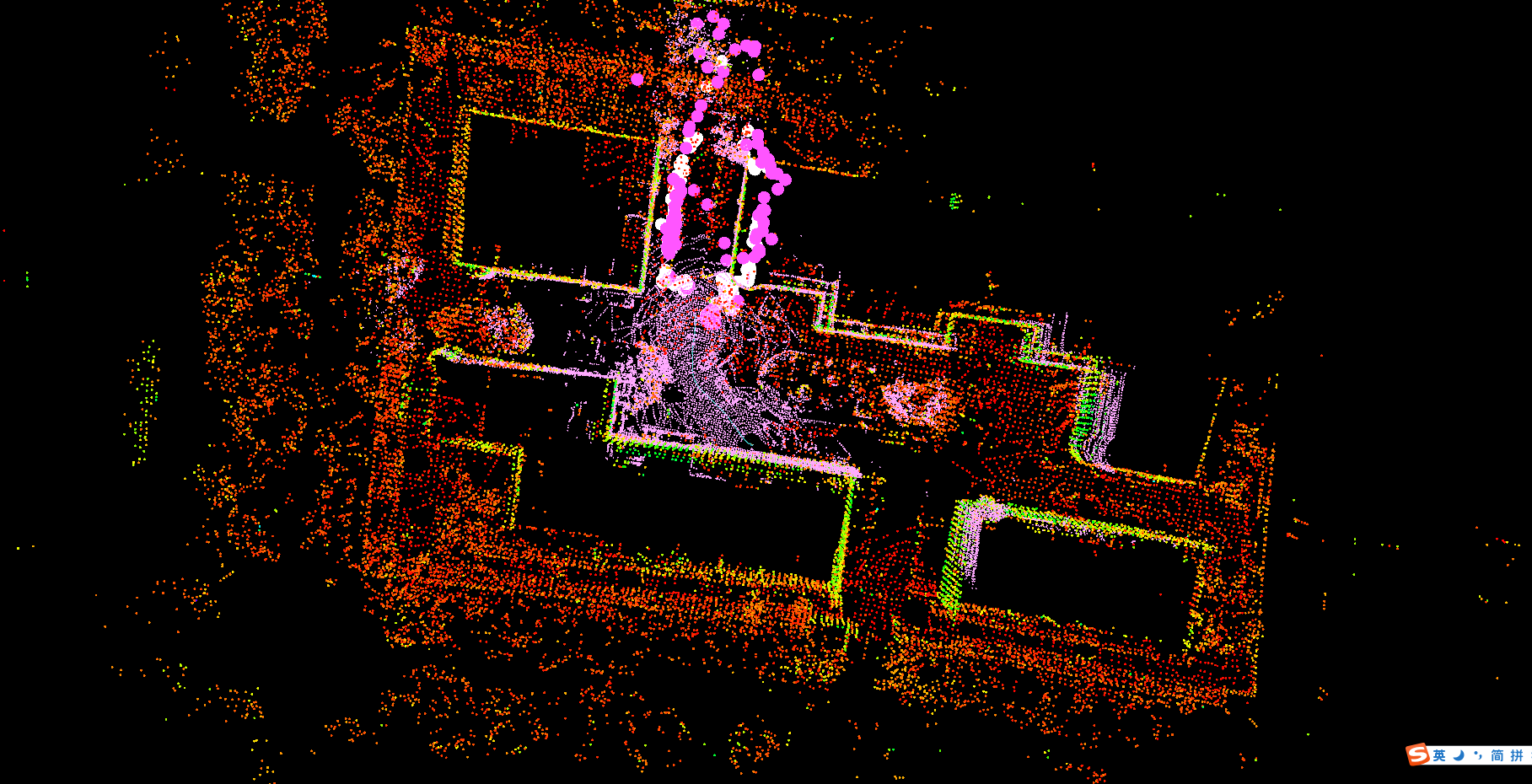

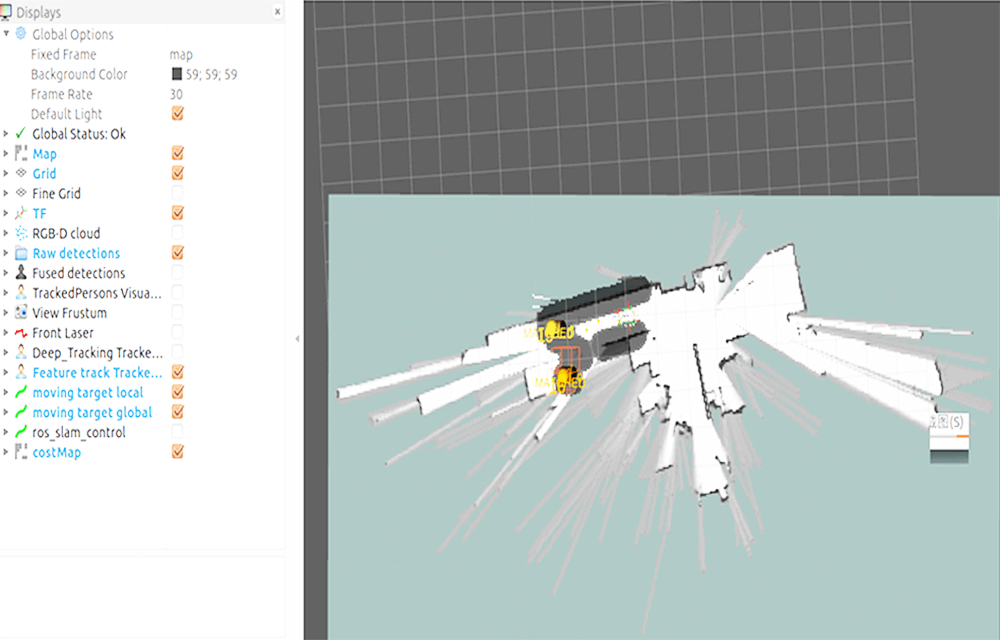

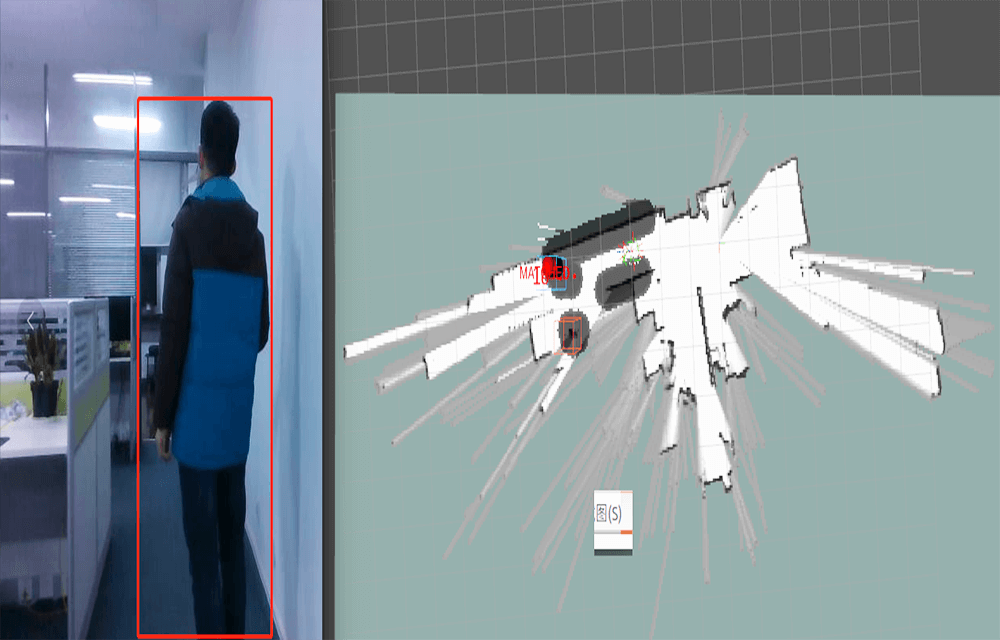

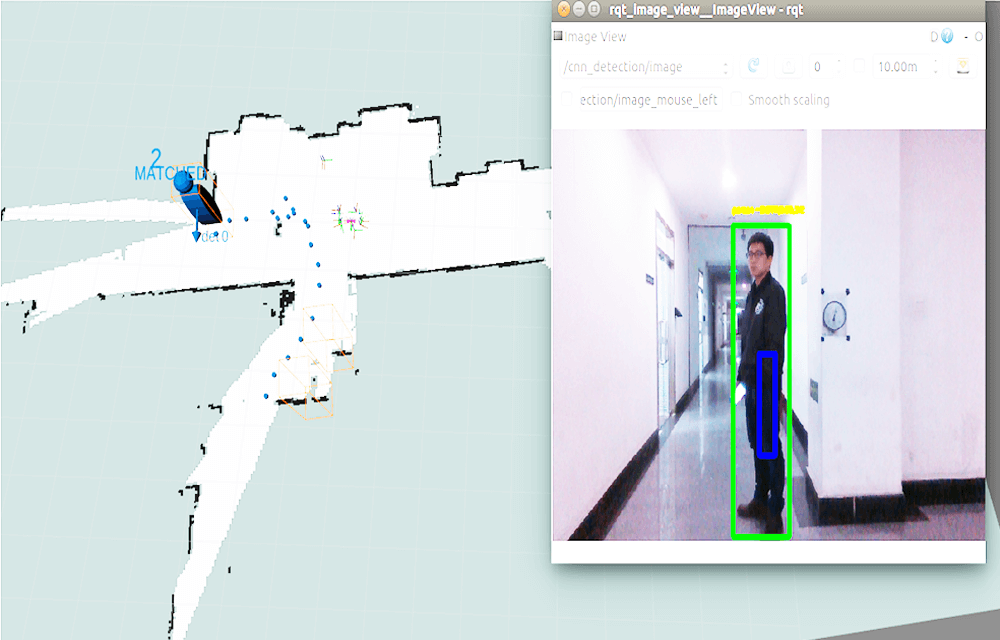

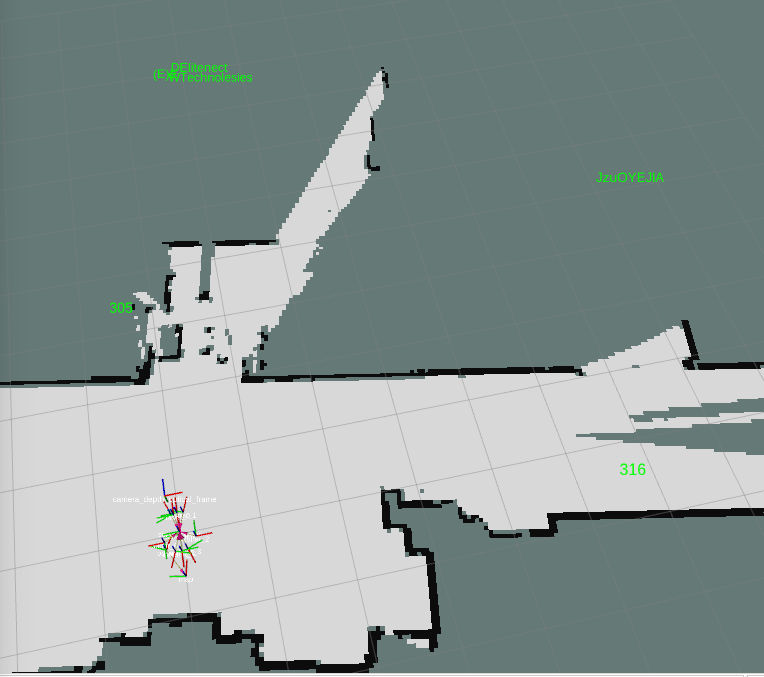

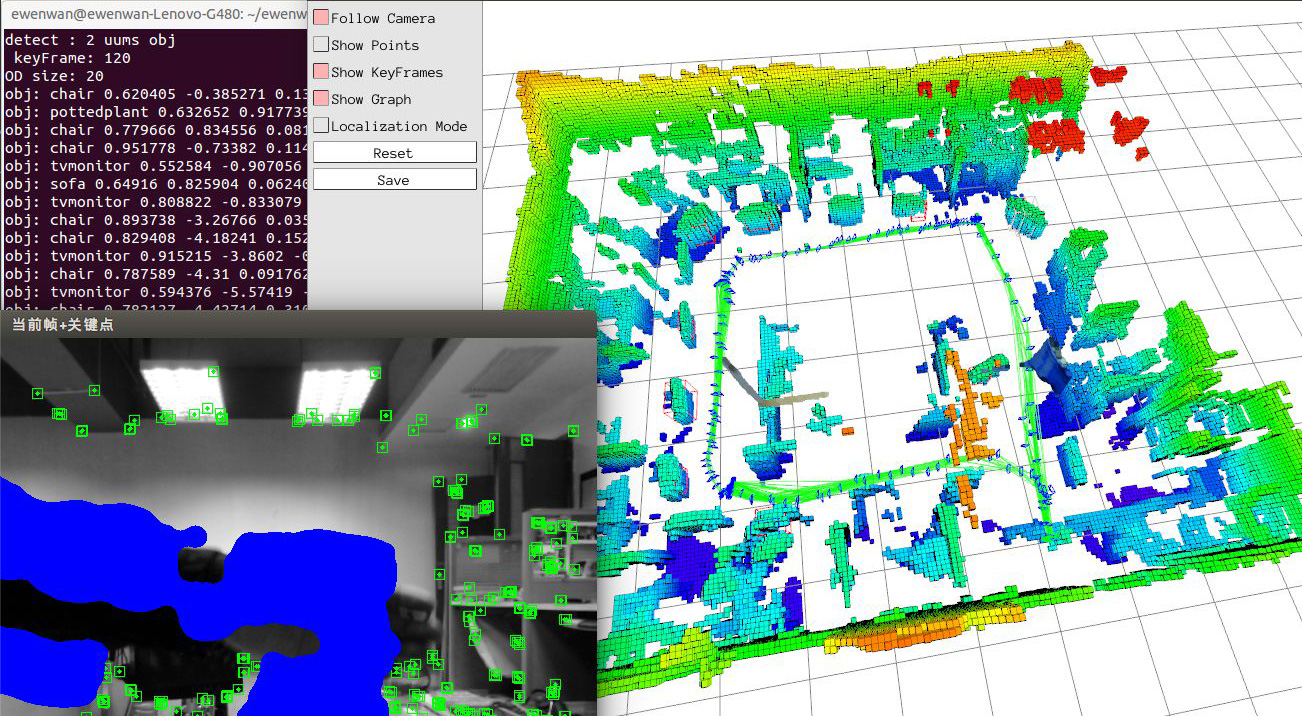

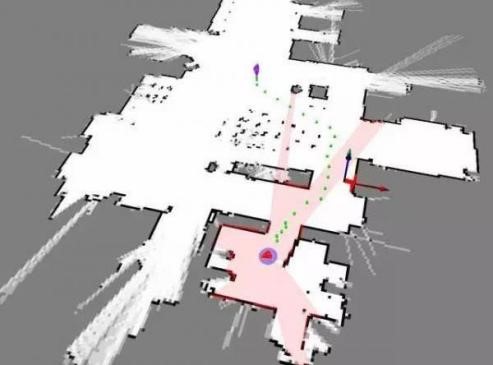

Eyesight Technology's SLAM solution supports both LiDAR SLAM and visual SLAM. It also supports a fused LiDAR + visual SLAM solution, which uses both the LiDAR and the camera for positioning and navigation.

LiDAR and cameras are different kinds of sensors, and each has its own advantages and disadvantages:

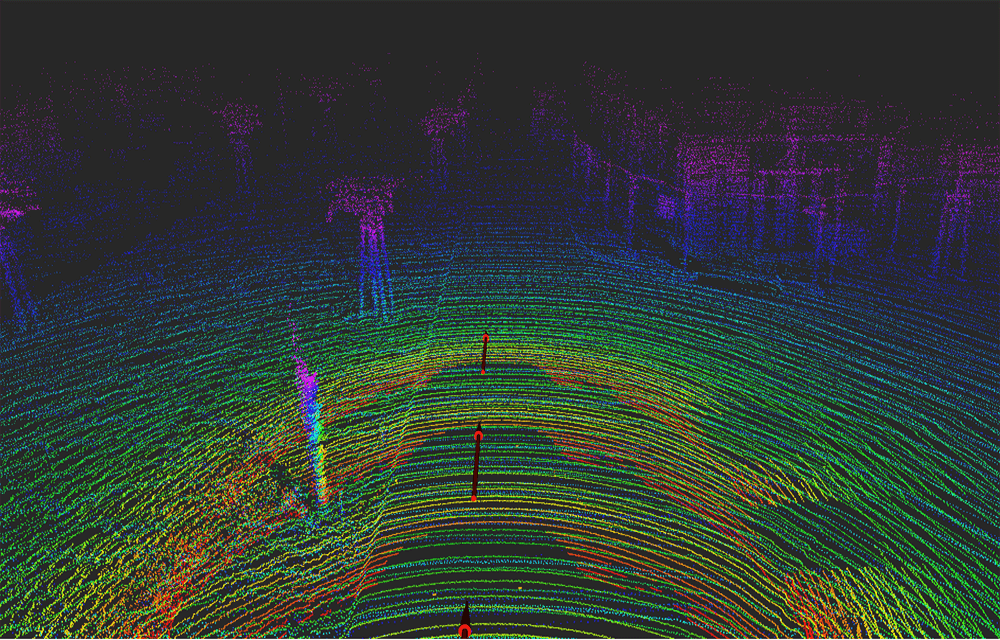

The advantage of LiDAR is that it provides accurate distance information, and because it emits its own light, it can work in complete darkness. The disadvantage is that its sampling points are relatively sparse (some single-line LiDARs return only 360 measurement points per scan). LiDAR SLAM also depends on aligning geometric point clouds, so it can fail in geometrically degenerate scenes such as long, straight tunnels, where successive scans look the same along the tunnel direction.
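To illustrate why a long, straight tunnel is a problem, here is a minimal sketch. It is a simplified 2D model and not the method used in the product. It assumes that each matched scan point constrains the sensor's translation only along the surface normal at that point, so the sum of the outer products of the normals shows which directions are observable. The function names and the `ratio_threshold` value are hypothetical, chosen only for illustration.

```python
import math

def constraint_matrix(normals):
    """Sum of outer products n * n^T over 2D unit surface normals.

    Returns (a, b, d) for the symmetric matrix [[a, b], [b, d]].
    """
    a = b = d = 0.0
    for nx, ny in normals:
        a += nx * nx
        b += nx * ny
        d += ny * ny
    return a, b, d

def eigenvalues_2x2(a, b, d):
    """Eigenvalues of the symmetric matrix [[a, b], [b, d]], smallest first."""
    mean = (a + d) / 2.0
    radius = math.hypot((a - d) / 2.0, b)
    return mean - radius, mean + radius

def is_degenerate(normals, ratio_threshold=1e-3):
    """True if some translation direction is (almost) unconstrained.

    ratio_threshold is an illustrative value, not a tuned parameter.
    """
    small, large = eigenvalues_2x2(*constraint_matrix(normals))
    return large == 0.0 or small / large < ratio_threshold

# Tunnel along the x axis: every point lies on a wall whose normal is +y or -y.
tunnel = [(0.0, 1.0)] * 180 + [(0.0, -1.0)] * 180

# Rectangular room: walls face all four directions.
room = ([(0.0, 1.0)] * 90 + [(0.0, -1.0)] * 90 +
        [(1.0, 0.0)] * 90 + [(-1.0, 0.0)] * 90)

print(is_degenerate(tunnel))  # True: nothing constrains motion along x
print(is_degenerate(room))    # False: both directions are constrained
```

In the tunnel case the smallest eigenvalue is zero, so sliding the scan along the tunnel does not change the alignment error, and scan matching alone cannot tell how far the sensor has moved.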

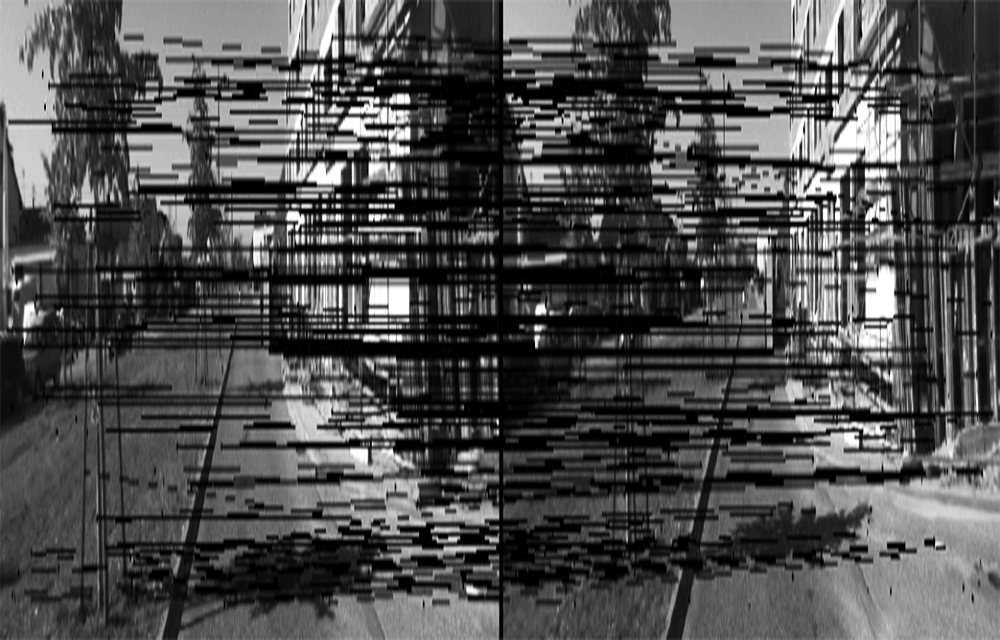

The camera provides rich environmental information (RGB), and that information is relatively dense (for example, an ordinary monocular camera delivers images of 640 × 480 pixels). The disadvantage of cameras is that they are sensitive to lighting conditions and, for example, cannot work in the dark. Visual SLAM also struggles in environments that lack distinctive features, such as overcast skies, snow-covered ground, or all-white walls.